Sensor fusion technologies are used to achieve situational awareness of a machine’s or vehicle’s surroundings. This awareness is built on various streams of data (sensing technologies) from the environment and it serves as a basis for autonomous navigation for vehicles and vessels. Sensor fusion offers a wide range of solutions that are also used in risk avoidance (e.g. collision warning), logistics optimization (e.g. tracking arrivals to a harbor), and industrial processes (e.g. quality control).

There are two ways to approach sensor fusion: with classic mathematics and statistics, or with deep-learning models. Classic sensor fusion leverages techniques like Kalman filters, Gaussian processes, and Bayesian methods (our more technical blog article dives into these). Nowadays AI allows for a modern take on sensor fusion, where neural networks filter and fuse the noisy sensor data. While deep learning can achieve superior levels of performance, success depends on the quality of the data pipeline and, of course, on the MLOps solution that orchestrates it all.

In this article, I will cover the most common use cases I’ve seen for sensor fusion, and gather some of my own experiences in working with modern AI-driven sensor fusion techniques in the maritime and automotive industries.

Sensor fusion combines sensing technologies to gain situational awareness

In short, sensor fusion is the probabilistic combination of data from multiple sources. Examples of sensors include cameras, radars, lidars, sonars, accelerometers, magnetometers, gyroscopes, altimeters, satellite navigation, and several others. The purpose is to reduce the uncertainty of the measurements coming from these various devices and to increase the robustness of the estimates by combining the strengths of different sensing technologies. Without different streams of data, it would be impossible for the machine to “sense” its environment reliably. In other words, it could not have situational awareness.
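To make the "reduce the uncertainty" idea concrete, here is a minimal sketch of inverse-variance weighting, one of the simplest classic fusion rules. It assumes the sensors measure the same quantity with independent, unbiased, roughly Gaussian noise. The function name `fuse` and the sensor readings are hypothetical and chosen only for illustration.

```python
def fuse(measurements):
    """Fuse independent (value, variance) pairs by inverse-variance weighting.

    Each sensor is weighted by how certain it is: a low variance gives a
    high weight. Assumes independent, unbiased measurement noise.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical range readings to the same obstacle: (metres, variance in m^2).
radar = (50.4, 0.25)
lidar = (49.9, 0.04)
camera = (52.0, 4.0)

fused_value, fused_variance = fuse([radar, lidar, camera])
print(f"fused range: {fused_value:.2f} m, variance: {fused_variance:.3f} m^2")
```

Under these assumptions the fused variance is always smaller than that of the best single sensor, which is the formal version of "combining the strengths of different sensing technologies". Real systems also have to handle biases, time offsets, and correlated errors, which this sketch ignores.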

I think you’ve guessed it already: the ultimate example of sensor fusion is the human body. Our sight, hearing, smell, touch, pressure, sense of balance, and other signals are fused by our nervous systems to enable our everyday life. Activities like running or going upstairs are only easy because our internal sensor fusion is truly impressive. As you may have seen, it takes decades of R&D to teach a robot to dance a few simple steps.

From Apollo to self-driving cars: understanding the environment

As a general mathematical method, sensor fusion is pervasive: the Apollo landing on the moon, the GPS navigation in our phones, the active suspension in cars, timing the arrival of trains, the trends in macroeconomics, weather forecasting with multi-band satellite data... Even the office (or your home office) could be using simple sensor fusion to modulate the lights and AC.

Sensor fusion excels at modeling motion under uncertainty. This is why the military was an early adopter: knowing the units’ positions on a hazy battlefield grants strategic advantage. When it comes to civilians and society, the best-known application is navigation systems such as the aforementioned GPS.

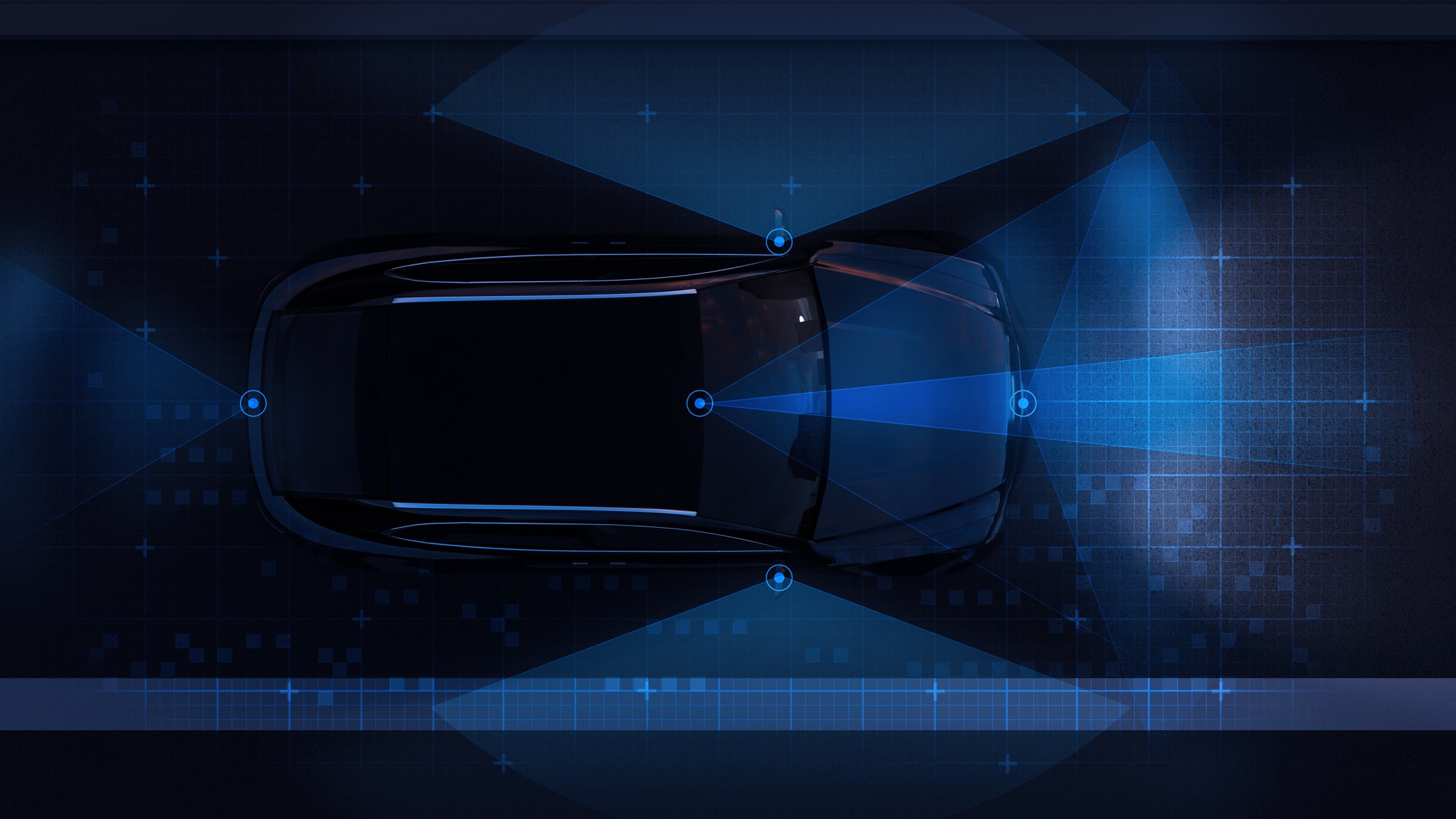

In the context of AI, sensor fusion is seen as the enabling technology behind autonomous vehicles and vessels. Eventually, sensor fusion will power the autonomous revolution in transportation. More specifically, sensor fusion is concerned with solving the problems of localization, navigation, detection, and tracking. These are the key building blocks to achieve situational awareness, which enables the vehicle to comprehend the surrounding environment in time and space so that it can operate autonomously.

Until we get there, we already benefit from early levels of automation such as adaptive cruise control (ACC), parking assistance, collision warning, lane keeping, emergency braking, and others. However, these advanced driver-assistance system (ADAS) features are only as good as the underlying sensor fusion technology. For example, ACC will behave erratically if the velocity of and distance to the vehicle ahead aren’t tracked consistently. On the other hand, ships and aircraft have enjoyed dependable autopilots for several decades now, mainly because navigation at sea and in the air is relatively easier.
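The ACC example can be sketched with a small Kalman filter that tracks the gap to the vehicle ahead and its relative speed from noisy range readings. This is only an illustration under stated assumptions: a constant-velocity motion model, a range-only sensor, and hand-picked noise settings (`q`, `r`). The function name `kalman_step` and all numbers are hypothetical, not taken from any production system.

```python
import numpy as np


def kalman_step(x, P, z, dt, q=0.5, r=1.0):
    """One predict/update cycle for the state x = [gap (m), relative speed (m/s)].

    q is the assumed acceleration noise variance, r the assumed range
    measurement variance; both are illustrative tuning values.
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])          # constant-velocity motion model
    H = np.array([[1.0, 0.0]])                     # the sensor observes the gap only
    Q = q * np.array([[dt**4 / 4, dt**3 / 2],
                      [dt**3 / 2, dt**2]])         # process noise from random acceleration
    R = np.array([[r]])

    # Predict: propagate the state and its uncertainty forward in time.
    x = F @ x
    P = F @ P @ F.T + Q

    # Update: correct the prediction with the new range measurement.
    innovation = z - H @ x
    S = H @ P @ H.T + R
    K = P @ H.T @ np.linalg.inv(S)
    x = x + K @ innovation
    P = (np.eye(2) - K @ H) @ P
    return x, P


# Simulated scenario: the gap starts at 40 m and closes at 2 m/s.
rng = np.random.default_rng(0)
dt = 0.1
true_gap, true_rel_speed = 40.0, -2.0

x = np.array([38.0, 0.0])      # rough initial guess, relative speed unknown
P = np.diag([10.0, 10.0])      # large initial uncertainty

for _ in range(100):
    true_gap += true_rel_speed * dt
    z = true_gap + rng.normal(0.0, 1.0)   # noisy range reading (1 m std)
    x, P = kalman_step(x, P, z, dt)

print(f"estimated gap: {x[0]:.1f} m, estimated relative speed: {x[1]:.2f} m/s")
```

Note that the relative speed is never measured directly in this sketch. The filter infers it from how the gap changes over time, which is the kind of consistent tracking that ACC depends on.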

Other modern uses for sensor fusion include healthcare (such as wearables for health monitoring), industrial automation (such as robotic arms, warehouse robots, and machinery monitoring), and IoT applications that optimize energy consumption.

Real-world application: my work in autonomous vehicles for maritime and automotive

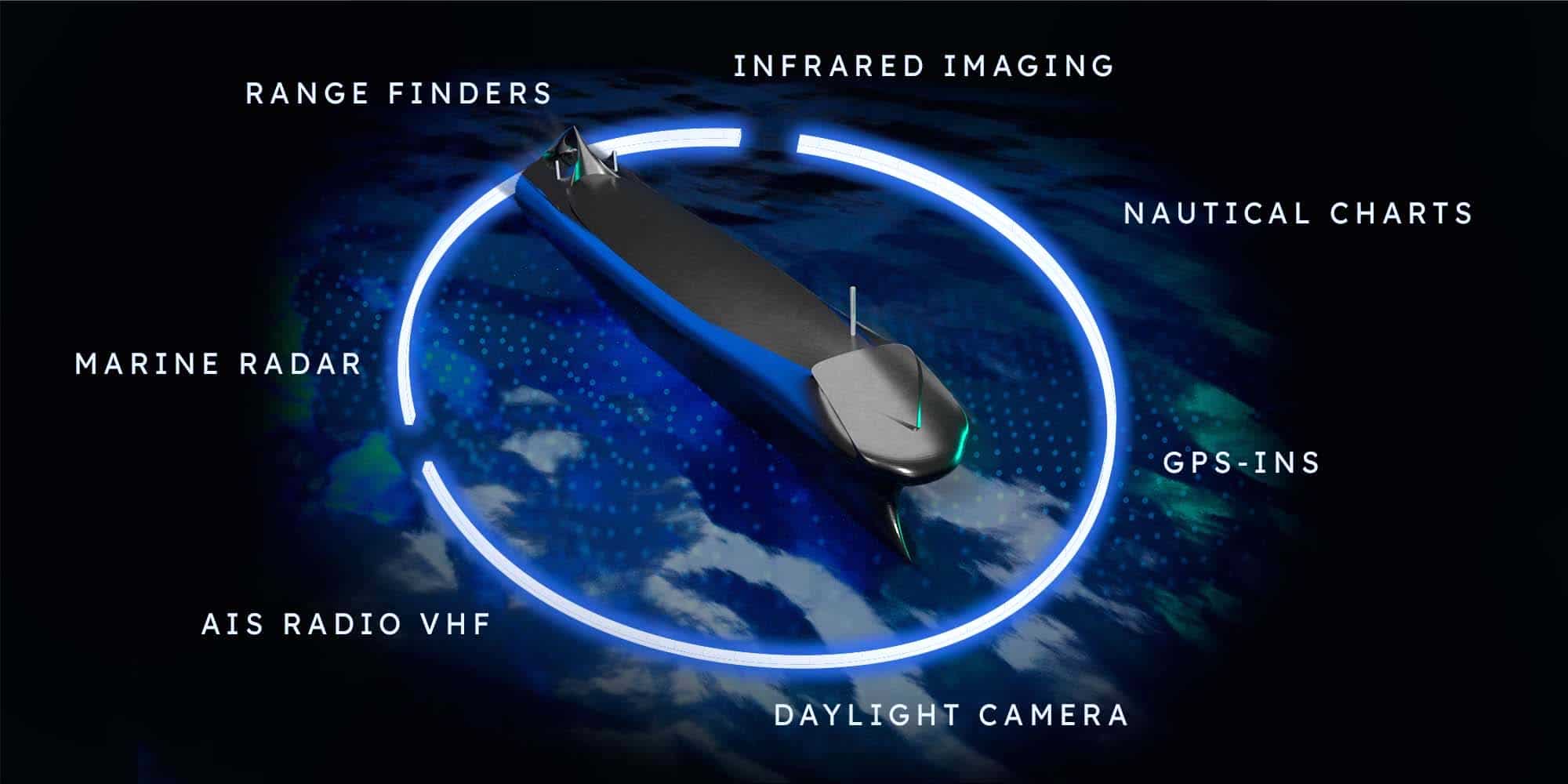

I’ve recently focused on building sensor fusion in the maritime and automotive industries. While the goal in these two areas is similar (fusing multiple sensors to achieve situational awareness and, ultimately, to enable autonomy), the challenges are different. The automotive environment requires split-second decisions to avoid and mitigate accidents. If the average human driver reacts in about half a second, a self-driving car must sense, plan, and act at least as fast. Marine vessels move slowly but steadily, which requires the system to make precise rather than fast decisions. A significant miscalculation could mean that a large container ship is bound to wreck with its cargo a few minutes later, regardless of any efforts to prevent it.

The differences also extend to the types of sensors we use. Lidars are used to discern obstacles from the ground and are crucial to most driverless car prototypes because they measure depth independently of ambient lighting. However, this sensing method is not as useful in maritime due to the distances involved. Radars are the all-around sensor for open sea navigation, while their use in urban environments is very challenging due to echoes and reflections. On the road, only cameras can read the traffic signs, although an autopilot could query the speed limits from a high-definition map (which, for that matter, should be considered a sensor too). At sea, cameras need to look out over long nautical distances, which makes even the smallest misalignment a serious problem when estimating the position and movement of visual objects.

Another interesting difference I’ve seen in my work between maritime and automotive is in motion dynamics. Cars drive on asphalt, concrete, and other hard surfaces that provide good traction and steady control of the direction. Vessels drift in the water due to low friction and the force of the wind, which makes the course over the ground differ from the heading. These unique dynamics need to be considered carefully when designing sensor fusion solutions such as Kalman filters.
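The gap between heading and course over the ground can be illustrated with simple vector addition. The sketch below assumes that all drift (wind and current combined) can be summarized as a single velocity vector added to the vessel's velocity through the water, which is a simplification. The function name `course_over_ground` and the numbers are hypothetical.

```python
import math


def course_over_ground(heading_deg, speed_through_water, drift_dir_deg, drift_speed):
    """Return (course over ground in degrees, speed over ground).

    Angles are compass bearings: 0 = north, 90 = east, clockwise positive.
    drift_dir_deg is the direction the drift pushes the vessel toward.
    """
    heading = math.radians(heading_deg)
    drift_dir = math.radians(drift_dir_deg)

    # Sum the through-water velocity and the drift velocity component-wise.
    north = speed_through_water * math.cos(heading) + drift_speed * math.cos(drift_dir)
    east = speed_through_water * math.sin(heading) + drift_speed * math.sin(drift_dir)

    cog = math.degrees(math.atan2(east, north)) % 360.0
    sog = math.hypot(north, east)
    return cog, sog


# Hypothetical case: bow pointing due north at 10 knots, 2-knot drift toward the east.
cog, sog = course_over_ground(0.0, 10.0, 90.0, 2.0)
print(f"course over ground: {cog:.1f} deg, speed over ground: {sog:.2f} kn")
```

In this example the vessel points north but travels roughly 11 degrees east of north. A motion model that assumes the vessel moves where it points, as a car mostly does, would therefore accumulate position error, which is why the state and dynamics of a maritime filter need to account for drift.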

Unlocking the many benefits of sensor fusion

As I’ve described in this article, sensor fusion enables features that wouldn’t otherwise be possible. With more accurate data and increased sharing of data, we are able to build vehicles that make better decisions because they better understand the situation they’re in.

Fields like smart industry, smart vehicles, and smart cities, including transportation, are the main areas where I believe AI/ML-driven approaches to sensor fusion will enable significant changes and, ultimately, improve the way we live.
